Co-author: @pboguslawski

"registration success email" is only used for notifying a user that "you

have a new account now" when the account is created by admin manually.

When a user uses an external auth source, they already know that they have

the account, so do not send such an email.

Co-authored-by: Giteabot <teabot@gitea.io>

The `GetAllCommits` endpoint can be pretty slow, especially in repos

with a lot of commits. The issue is that it spends a lot of time

calculating information that may not be useful/needed by the user.

The `stat` param was previously added in #21337 to address this, by

allowing the user to disable calculating stats for each commit. But

this has two issues:

1. The name `stat` is rather misleading, because disabling `stat`

disables the Stat **and** Files. This should be separated out into two

different params, because getting a list of affected files is much less

expensive than calculating the stats

2. There's still other costly information provided that the user may not

need, such as `Verification`

This PR adds two parameters to the endpoint, `files` and `verification`,

to allow the user to explicitly disable this information when listing

commits. Both parameters default to true.
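
A minimal sketch of how a client could opt out of the expensive fields. Only the parameter names `stat`, `files` and `verification` come from this PR; the `commitListOptions` struct, the `commitListURL` helper and the `/repos/{owner}/{repo}/commits` path are assumptions made for illustration.

```go
package main

import (
	"net/url"
	"strconv"
)

// commitListOptions holds the three toggles discussed above.
// The struct is hypothetical; only the parameter names come from the PR text.
type commitListOptions struct {
	Stat         bool
	Verification bool
	Files        bool
}

// commitListURL builds a request URL for listing commits.
// The "/repos/{owner}/{repo}/commits" path is an assumption.
func commitListURL(apiBase, owner, repo string, opts commitListOptions) string {
	q := url.Values{}
	q.Set("stat", strconv.FormatBool(opts.Stat))
	q.Set("verification", strconv.FormatBool(opts.Verification))
	q.Set("files", strconv.FormatBool(opts.Files))
	// Encode sorts the keys, so the query string is deterministic.
	return apiBase + "/repos/" + url.PathEscape(owner) + "/" + url.PathEscape(repo) + "/commits?" + q.Encode()
}
```

Passing a zero-value `commitListOptions{}` disables all three, which should be the cheapest way to list commits.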

1. Remove unused fields/methods in web context.

2. Make callers call target function directly instead of the light

wrapper like "IsUserRepoReaderSpecific"

3. The "issue template" code shouldn't be put in the "modules/context"

package, so move them to the service package.

---------

Co-authored-by: Giteabot <teabot@gitea.io>

# ⚠️ Breaking

Many deprecated queue config options are removed (actually, they should

have been removed in 1.18/1.19).

If you see the fatal message when starting Gitea: "Please update your

app.ini to remove deprecated config options", please follow the error

messages to remove these options from your app.ini.

Example:

```

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].ISSUE_INDEXER_QUEUE_TYPE`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].UPDATE_BUFFER_LEN`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [F] Please update your app.ini to remove deprecated config options

```

Many options in `[queue]` are dropped, including:

`WRAP_IF_NECESSARY`, `MAX_ATTEMPTS`, `TIMEOUT`, `WORKERS`,

`BLOCK_TIMEOUT`, `BOOST_TIMEOUT`, `BOOST_WORKERS`. They can be removed

from app.ini.
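
To find leftovers before upgrading, something like the following could be used. This is a hypothetical helper, not Gitea's own check: it only looks at the exact `[queue]` section, matches key names case-sensitively, and does a naive line-based parse of the ini text.

```go
package main

import (
	"bufio"
	"strings"
)

// removedQueueKeys lists the `[queue]` options named above as dropped.
var removedQueueKeys = map[string]bool{
	"WRAP_IF_NECESSARY": true, "MAX_ATTEMPTS": true, "TIMEOUT": true, "WORKERS": true,
	"BLOCK_TIMEOUT": true, "BOOST_TIMEOUT": true, "BOOST_WORKERS": true,
}

// findRemovedQueueKeys returns the dropped keys that still appear in the
// [queue] section of the given app.ini content (hypothetical helper).
func findRemovedQueueKeys(iniText string) []string {
	var found []string
	section := ""
	sc := bufio.NewScanner(strings.NewReader(iniText))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		switch {
		case line == "" || strings.HasPrefix(line, ";") || strings.HasPrefix(line, "#"):
			continue // blank line or comment
		case strings.HasPrefix(line, "[") && strings.HasSuffix(line, "]"):
			section = strings.TrimSpace(line[1 : len(line)-1])
		case section == "queue":
			key, _, ok := strings.Cut(line, "=")
			if key = strings.TrimSpace(key); ok && removedQueueKeys[key] {
				found = append(found, key)
			}
		}
	}
	return found
}
```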

# The problem

The old queue package has some legacy problems:

* complexity: I doubt many people could tell how it works.

* maintainability: Too many channels and mutex/cond are mixed together,

too many different structs/interfaces depend on each other.

* stability: due to the complexity & maintainability, there are sometimes

strange bugs that are difficult to debug, and some code doesn't have tests

(indeed some code is difficult to test because a lot of things are mixed

together).

* general applicability: although it is called "queue", its behavior is

not a well-known queue.

* scalability: it doesn't seem easy to make it work with a cluster

without breaking its behaviors.

It came from some very old code to "avoid breaking", however, its

technical debt is too heavy now. It's a good time to introduce a better

"queue" package.

# The new queue package

It keeps using old config and concept as much as possible.

* It only contains two major kinds of concepts:

* The "base queue": channel, levelqueue, redis

* They have the same abstraction, the same interface, and they are

tested by the same testing code.

* The "WorkerPoolQueue": it uses the "base queue" to provide the "worker

pool" function and calls the "handler" to process the data in the base

queue.

* The new code doesn't do "PushBack"

* Think about a queue with many workers, the "PushBack" can't guarantee

the order for re-queued unhandled items, so in new code it just does

"normal push"

* The new code doesn't do "pause/resume"

* The "pause/resume" was designed to handle some handler's failure: eg:

document indexer (elasticsearch) is down

* If a queue is paused for a long time, either the producers block or the

new items are dropped.

* The new code doesn't do such "pause/resume" trick, it's not a common

queue's behavior and it doesn't help much.

* If there are unhandled items, the "push" function just blocks for a

few seconds and then re-queue them and retry.

* The new code doesn't do "worker booster"

* Gitea's queue's handlers are light functions, the cost is only the

go-routine, so it doesn't make sense to "boost" them.

* The new code only uses "max worker number" to limit the concurrent

workers.

* The new "Push" never blocks forever

* Instead of creating more and more blocking goroutines, returning an error

is more friendly to the server and to the end user.
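
The "never blocks forever" idea can be sketched with a buffered channel and a timer. This is an illustration only, not the actual queue code: `boundedQueue`, `newBoundedQueue` and `errPushTimeout` are hypothetical names.

```go
package main

import (
	"errors"
	"time"
)

// errPushTimeout is returned when the queue stays full for too long.
var errPushTimeout = errors.New("queue push timed out")

// boundedQueue is a hypothetical channel-backed queue.
type boundedQueue struct {
	items       chan string
	pushTimeout time.Duration
}

func newBoundedQueue(size int, pushTimeout time.Duration) *boundedQueue {
	return &boundedQueue{items: make(chan string, size), pushTimeout: pushTimeout}
}

// Push waits at most pushTimeout for free space and then gives up with an
// error, so callers never pile up as goroutines that block forever.
func (q *boundedQueue) Push(item string) error {
	timer := time.NewTimer(q.pushTimeout)
	defer timer.Stop()
	select {
	case q.items <- item:
		return nil
	case <-timer.C:
		return errPushTimeout
	}
}
```

The caller decides what to do with the error (log it, retry later, or report it to the user), which is the behavior argued for above.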

There are more details in code comments: eg: the "Flush" problem, the

strange "code.index" hanging problem, the "immediate" queue problem.

Almost ready for review.

TODO:

* [x] add some necessary comments during review

* [x] add some more tests if necessary

* [x] update documents and config options

* [x] test max worker / active worker

* [x] re-run the CI tasks to see whether any test is flaky

* [x] improve the `handleOldLengthConfiguration` to provide more

friendly messages

* [x] fine tune default config values (eg: length?)

## Code coverage:

Close #24213

Replace #23830

#### Cause

- Before, so that a PR could get the latest commit after reopening,

the `ref` (${REPO_PATH}/refs/pull/${PR_INDEX}/head) of every closed PR

was updated when pushing commits to the `head branch` of the closed

PR.

#### Changes

- For a closed PR, pushing to the `head branch` of the closed PR no longer

performs these behaviors: insert a `comment`, push a

`notification` (UI and email), execute the

[pushToBaseRepo](7422503341/services/pull/pull.go (L409))

function, and trigger an `action`.

- Refresh the reference of the PR when reopening the closed PR (**even

if the head branch has been deleted before**). Make the reference of PR

consistent with the `head branch`.

The `..` should be covered by TestUserTitleToWebPath.

Otherwise, if the random string is "..", it causes unnecessary failure

in TestUserWebGitPathConsistency

Since the login form label for user_name unconditionally displays

`Username or Email Address` for the `user_name` field, bring matching

LDAP filters to more prominence in the documentation/placeholders.

Signed-off-by: Gary Moon <gary@garymoon.net>

Partially for #24457

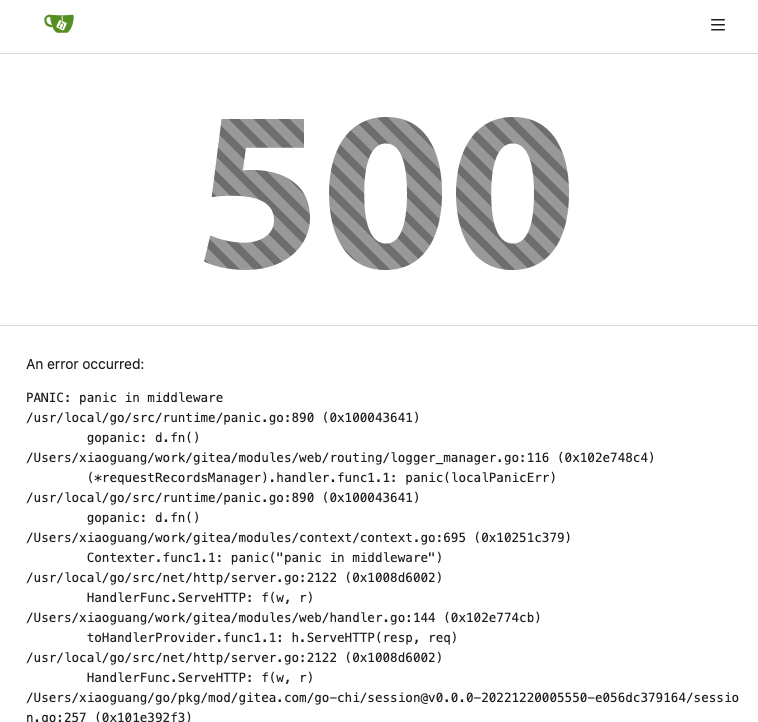

Major changes:

1. The old `signedUserNameStringPointerKey` is quite hacky, use

`ctx.Data[SignedUser]` instead

2. Move duplicate code from `Contexter` to `CommonTemplateContextData`

3. Remove incorrect copying&pasting code `ctx.Data["Err_Password"] =

true` in API handlers

4. Use one unique `RenderPanicErrorPage` for panic error page rendering

5. Move `stripSlashesMiddleware` to be the first middleware

6. Install global panic recovery handler, it works for both `install`

and `web`

7. Make `500.tmpl` only depend minimal template functions/variables,

avoid triggering new panics

Add ntlm authentication support for mail

use "github.com/Azure/go-ntlmssp"

---------

Co-authored-by: yangtan_win <YangTan@Fitsco.com.cn>

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: @awkwardbunny

This PR adds a Debian package registry. You can follow [this

tutorial](https://www.baeldung.com/linux/create-debian-package) to build

a *.deb package for testing. Source packages are not supported at the

moment and I did not find documentation of the architecture "all" and

how these packages should be treated.

---------

Co-authored-by: Brian Hong <brian@hongs.me>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

There was only one `IsRepositoryExist` function, it did: `has && isDir`

However it's not right, and it would cause 500 error when creating a new

repository if the dir exists.

Then, it was changed to `has || isDir`, it is still incorrect, it

affects the "adopt repo" logic.

To make the logic clear:

* IsRepositoryModelOrDirExist

* IsRepositoryModelExist
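
A rough sketch of how the two checks differ. The signatures are simplified to plain booleans, and `canCreate` / `canAdopt` are hypothetical callers added only to show why one combined check could not serve both cases.

```go
package main

// isRepositoryModelExist reports whether a database record exists.
func isRepositoryModelExist(hasModel bool) bool { return hasModel }

// isRepositoryModelOrDirExist reports whether either the database record
// or the directory on disk exists.
func isRepositoryModelOrDirExist(hasModel, isDir bool) bool { return hasModel || isDir }

// canCreate (hypothetical): creating must be refused if anything is in the way.
func canCreate(hasModel, isDir bool) bool { return !isRepositoryModelOrDirExist(hasModel, isDir) }

// canAdopt (hypothetical): adopting needs a directory that has no record yet.
func canAdopt(hasModel, isDir bool) bool { return isDir && !isRepositoryModelExist(hasModel) }
```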

Fix https://github.com/go-gitea/gitea/pull/24362/files#r1179095324

`getAuthenticatedMeta` already checks them, so this code is duplicated.

And the first invocation has a wrong permission check: `DownloadHandle`

should require read permission, not write.

> The scoped token PR just checked all API routes but in fact, some web

routes like `LFS`, git `HTTP`, container, and attachments supports basic

auth. This PR added scoped token check for them.

---------

Signed-off-by: jolheiser <john.olheiser@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The Infinite Monkey Random Typing catches a bug, inconsistent wiki path

converting.

Close #24276

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

- Update all tool dependencies to latest tag

- Remove unused errcheck, it is part of golangci-lint

- Include main.go in air

- Enable wastedassign again now that it's

[generics-compatible](https://github.com/golangci/golangci-lint/pull/3689)

- Restructured lint targets to new `lint-*` namespace

Before, there was a `log/buffer.go`, but that design is not general, and

it introduces a lot of irrelevant `Content() (string, error) ` and

`return "", fmt.Errorf("not supported")` .

And the old `log/buffer.go` is difficult to use, developers have to

write a lot of `Contains` and `Sleep` code.

The new `LogChecker` is designed to be a general approach to help to

assert some messages appearing or not appearing in logs.

Close #24195

Some of the changes are taken from another fix of mine

f07b0de997

in #20147 (although that PR was discarded ....)

The bug is:

1. The old code doesn't handle `removedfile` event correctly

2. The old code doesn't provide attachments for type=CommentTypeReview

This PR doesn't intend to refactor the "upload" code to a perfect state

(to avoid making the review difficult), so some legacy styles are kept.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

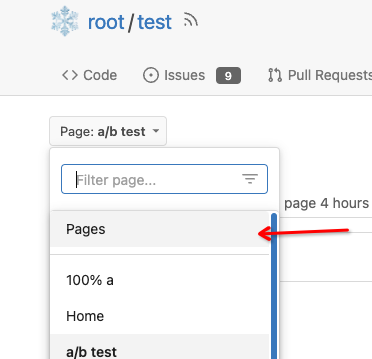

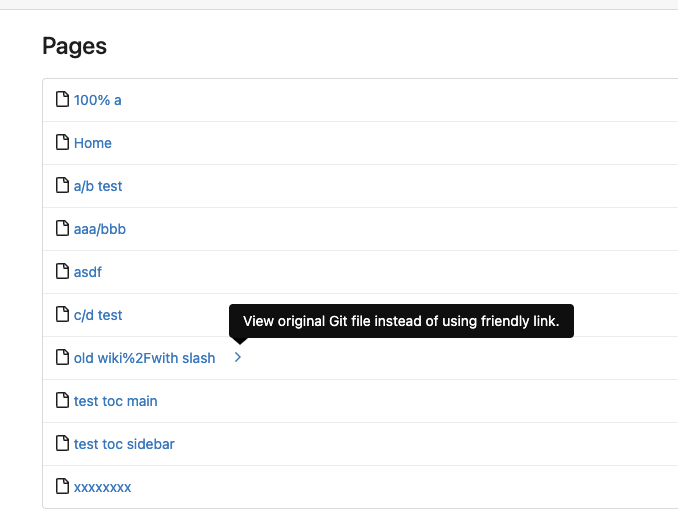

Close #7570

1. Clearly define the wiki path behaviors, see

`services/wiki/wiki_path.go` and tests

2. Keep compatibility with old contents

3. Allow to use dashes in titles, eg: "2000-01-02 Meeting record"

4. Add a "Pages" link in the dropdown, otherwise users can't go to the

Pages page easily.

5. Add a "View original git file" link in the Pages list, even if some

file names are broken, users still have a chance to edit or remove it,

without cloning the wiki repo to local.

6. Fix 500 error when the name contains prefix spaces.

This PR also introduces the ability to support sub-directories, but it

can't be done at the moment because there is a lot of legacy wiki data

that uses "%2F" in file names.

Co-authored-by: Giteabot <teabot@gitea.io>

This allows for usernames, and emails connected to them to be reserved

and not reused.

Use case, I manage an instance with open registration, and sometimes

when users are deleted for spam (or other purposes), their usernames are

freed up and they sign up again with the same information.

This could also be used to reserve usernames, and block them from being

registered (in case an instance would like to block certain things

without hardcoding the list in code and compiling from scratch).

This is an MVP, that will allow for future work where you can set

something as reserved via the interface.

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Close #24062

At the beginning, I just wanted to fix the warning mentioned by #24062.

But the cookie code really doesn't look good to me, so clean it up.

Complete the TODO on `SetCookie`:

> TODO: Copied from gitea.com/macaron/macaron and should be improved

after macaron removed.

In the `for` loop, the value of `membershipsToAdd[org]` and

`membershipsToRemove[org]` is a slice that should be appended instead of

overwritten.

Due to the current overwrite, the LDAP group sync only matches the last

group at the moment.
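
A minimal sketch of the append-versus-overwrite difference. The function name `teamsToAdd` and the shape of the mapping (group DN to organization to team names) are assumptions made for illustration, not the actual sync code.

```go
package main

// teamsToAdd collects, per organization, the teams a user should join
// based on the LDAP groups the user belongs to.
// groupTeamMap is keyed by group DN, then by organization name.
func teamsToAdd(groupTeamMap map[string]map[string][]string, userGroups []string) map[string][]string {
	result := make(map[string][]string)
	for _, group := range userGroups {
		for org, teams := range groupTeamMap[group] {
			// Append instead of overwriting: `result[org] = teams` would keep
			// only the teams of the last matching group.
			result[org] = append(result[org], teams...)
		}
	}
	return result
}
```

With two groups that both map to the same organization, the result now contains the teams of both groups instead of only those of the last one.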

## Example reproduction

- an LDAP user is both a member of

`cn=admin_staff,ou=people,dc=planetexpress,dc=com` and

`cn=ship_crew,ou=people,dc=planetexpress,dc=com`.

- configuration of `Map LDAP groups to Organization teams ` in

`Authentication Sources`:

```json

{

"cn=admin_staff,ou=people,dc=planetexpress,dc=com":{

"test_organization":[

"admin_staff",

"test_add"

]

},

"cn=ship_crew,ou=people,dc=planetexpress,dc=com":{

"test_organization":[

"ship_crew"

]

}

}

```

- start `Synchronize external user data` task in the `Dashboard`.

- the user was only added for the team `test_organization.ship_crew`

None of the features of `unrolled/render` package is used.

The Golang builtin "html/template" just works well. Then we can improve

our HTML render to resolve the "$.root.locale.Tr" problem as much as

possible.

Next step: we can have a template render pool (by Clone), then we can

inject global functions with dynamic context to every `Execute` calls.

Then we can use `{{Locale.Tr ....}}` directly in all templates , no need

to pass the `$.root.locale` again and again.

Currently using the tip of main

(2c585d62a4) and when deleting a branch

(and presumably tag, but not tested), no workflows with `on: [delete]`

are being triggered. The runner isn't being notified about them. I see

this in the gitea log:

`2023/04/04 04:29:36 ...s/notifier_helper.go:102:Notify() [E] an error

occurred while executing the NotifyDeleteRef actions method:

gitRepo.GetCommit: object does not exist [id: test, rel_path: ]`

Understandably the ref has already been deleted and so `GetCommit`

fails. Currently at

https://github.com/go-gitea/gitea/blob/main/services/actions/notifier_helper.go#L130,

if the ref is an empty string it falls back to the default branch name.

This PR also checks if it is a `HookEventDelete` and does the same.

Currently `${{ github.ref }}` would be equivalent to the deleted branch

(if `notify()` succeeded), but this PR allows `notify()` to proceed and

also aligns it with the GitHub Actions behavior at

https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows#delete:

`$GITHUB_REF` / `${{ github.ref }}` => Default branch (main/master)

`$GITHUB_SHA` / `${{ github.sha }}` => Last commit on default branch

If the user needs the name of the deleted branch (or tag), it is

available as `${{ github.event.ref }}`.

There appears to be no way for the user to get the tip commit SHA of the

deleted branch (GitHub does not do this either).

N.B. there may be conditions other than `HookEventDelete` where

the default branch ref needs to be swapped in, but this was sufficient for my

use case.
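
The fallback described above boils down to something like this. It is a simplified sketch: `hookEventType`, its constants and `refForWorkflow` are hypothetical stand-ins, not the code in `notifier_helper.go`.

```go
package main

// hookEventType and its constants are hypothetical stand-ins for the
// real webhook event type.
type hookEventType string

const (
	hookEventPush   hookEventType = "push"
	hookEventDelete hookEventType = "delete"
)

// refForWorkflow picks the ref used to look up the commit and workflows.
// For a delete event the pushed ref no longer exists, so the default
// branch is used, the same as when no ref is given at all.
func refForWorkflow(event hookEventType, ref, defaultBranch string) string {
	if ref == "" || event == hookEventDelete {
		return defaultBranch
	}
	return ref
}
```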

Previously, deleted release attachments were kept forever on the

external storage.

Note: It may be very slow now if there are many attachments to be

deleted on this release.

Fix #23728

There is no fork concept in agit flow, anyone with read permission can

push `refs/for/<target-branch>/<topic-branch>` to the repo. So we should

treat it as a fork pull request because it may be from an untrusted

user.

When running listLdapGroupMemberships check if the groupFilter is empty

before using it to list memberships.

Fix #23615

Signed-off-by: Andrew Thornton <art27@cantab.net>

Adds API endpoints to manage issue/PR dependencies

* `GET /repos/{owner}/{repo}/issues/{index}/blocks` List issues that are

blocked by this issue

* `POST /repos/{owner}/{repo}/issues/{index}/blocks` Block the issue

given in the body by the issue in path

* `DELETE /repos/{owner}/{repo}/issues/{index}/blocks` Unblock the issue

given in the body by the issue in path

* `GET /repos/{owner}/{repo}/issues/{index}/dependencies` List an

issue's dependencies

* `POST /repos/{owner}/{repo}/issues/{index}/dependencies` Create a new

issue dependency

* `DELETE /repos/{owner}/{repo}/issues/{index}/dependencies` Remove an

issue dependency
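
For client code, the six routes share one path shape and differ only in the HTTP method and the last segment. A small hypothetical helper (not part of this PR) that builds the path:

```go
package main

import "fmt"

// issueDependencyPath returns the API path for the "blocks" or
// "dependencies" collection of an issue. The helper is hypothetical;
// the path layout is taken from the endpoint list above.
func issueDependencyPath(owner, repo string, index int64, kind string) (string, error) {
	if kind != "blocks" && kind != "dependencies" {
		return "", fmt.Errorf("unknown dependency kind %q", kind)
	}
	return fmt.Sprintf("/repos/%s/%s/issues/%d/%s", owner, repo, index, kind), nil
}
```

The same path is then used with `GET`, `POST` or `DELETE` depending on the operation.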

Closes https://github.com/go-gitea/gitea/issues/15393

Closes #22115

Co-authored-by: Andrew Thornton <art27@cantab.net>

`HookEventType` of pull request review comments should be

`HookEventPullRequestReviewComment` but some event types are

`HookEventPullRequestComment` now.

Since #23493 has conflicts with latest commits, this PR is my proposal

for fixing #23371

Details are in the comments

And refactor the `modules/options` module, to make it always use

"filepath" to access local files.

Benefits:

* No need to do `util.CleanPath(strings.ReplaceAll(p, "\\", "/"))),

"/")` any more (not only one before)

* The function behaviors are clearly defined

Close #23440

Caused by #23189

In #23189, we should insert a comment record into db when pushing a

commit to the PR, even if the PR is closed.

But should skip sending any notification in this case.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

this is a simple endpoint that adds the ability to rename users to the

admin API.

Note: this is not in a mergeable state. It would be better if this was

handled by a PATCH/POST to the /api/v1/admin/users/{username} endpoint

and the username is modified.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

When creating attachments (issue, release, repo) the file size (being

part of the multipart file header) is passed through the chain of

creating an attachment to ensure the MinIO client can stream the file

directly instead of having to read it to memory completely at first.

Fixes #23393

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Fixes https://github.com/go-gitea/gitea/issues/22676

Context Data `IsOrganizationMember` and `IsOrganizationOwner` are used to

control the visibility of the `people` and `team` tabs.

2871ea0809/templates/org/menu.tmpl (L19-L40)

And because of the reuse of the user projects page, User Context is changed

to Organization Context. But the values of `IsOrganizationMember` and

`IsOrganizationOwner` are not set.

I reused func `HandleOrgAssignment` to add them to the ctx, but may have

some unnecessary variables, idk whether it is ok.

I found there is a missing `PageIsViewProjects` at create project page.

When there is an error creating a new openIDConnect authentication

source try to handle the error a little better.

Close #23283

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

When email addresses are private, squash merges always use

`@noreply.localhost` for the author of the squash commit. And the author

is redundantly added as a co-author in the commit message.

Also without private mails, the redundant co-author is possible when

committing with a signature that's different than the user full name and

primary email.

Now try to find a commit by the same user in the list of commits, and

prefer the signature from that over one constructed from the account

settings.

When the base repository contains multiple branches with the same

commits as the base branch, pull requests can show a long list of

commits already in the base branch as having been added.

What this is supposed to do is exclude commits already in the base

branch. But the mechanism to do so assumed a commit only exists in a

single branch. Now use `git rev-list A B --not branchName` instead of

filtering commits afterwards.

The logic to detect if there was a force push also was wrong for

multiple branches. If the old commit existed in any branch in the base

repository it would assume there was no force push. Instead check if the

old commit is an ancestor of the new commit.
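
As a sketch, the two git invocations could be assembled like this. The `rev-list ... --not` form is the one named above; using `git merge-base --is-ancestor` for the ancestry check is an assumption about one way to do it, and both helper names are hypothetical.

```go
package main

// newCommitsArgs lists the commits reachable from a and b, excluding
// everything already reachable from baseBranch.
func newCommitsArgs(a, b, baseBranch string) []string {
	return []string{"rev-list", a, b, "--not", baseBranch}
}

// isAncestorArgs builds a check that exits with status 0 when oldCommit
// is an ancestor of newCommit. A non-zero status then suggests the branch
// was force-pushed.
func isAncestorArgs(oldCommit, newCommit string) []string {
	return []string{"merge-base", "--is-ancestor", oldCommit, newCommit}
}
```

Because `--not` excludes by reachability, it does not matter how many other branches also contain the same commits.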

Follow #22568

* Remove unnecessary ToTrustedCmdArgs calls

* the FAQ in #22678

* Quote: When using ToTrustedCmdArgs, the code will be very complex (see

the changes for examples). Then developers and reviewers can know that

something might be unreasonable.

* The `signArg` couldn't be empty, it's either `-S{keyID}` or

`--no-gpg-sign`.

* Use `signKeyID` instead, add comment "empty for no-sign, non-empty to

sign"

* 5-line code could be extracted to a common `NewGitCommandCommit()` to

handle the `signKeyID`, but I think it's not a must, current code is

clear enough.

Related to: #22294 #23186 #23054

Replace: #23218

Some discussion is in the comments of #23218.

Highlights:

- Add Expiration for cache context. If a cache context has been used for

more than 10s, the cache data will be ignored, and warning logs will be

printed.

- Add `discard` field to `cacheContext`, a `cacheContext` with `discard`

true will drop all cached data and won't store any new one.

- Introduce `WithNoCacheContext`, if one wants to run long-life tasks,

but the parent context is a cache context,

`WithNoCacheContext(parentCtx)` will discard the cache data, so it will

be safe to keep the context for a long time.

- It will be fine to treat an original context as a cache context, like

`GetContextData(context.Background())`, no warning logs will be printed.

Some cases about nesting:

When:

- *A*, *B* or *C* means a cache context.

- ~*A*~, ~*B*~ or ~*C*~ means a discard cache context.

- `ctx` means `context.Background()`

- *A(ctx)* means a cache context with `ctx` as the parent context.

- *B(A(ctx))* means a cache context with `A(ctx)` as the parent context.

- `With` means `WithCacheContext`

- `WithNo` means `WithNoCacheContext`

So:

- `With(ctx)` -> *A(ctx)*

- `With(With(ctx))` -> *A(ctx)*, not *B(A(ctx))*

- `With(With(With(ctx)))` -> *A(ctx)*, not *C(B(A(ctx)))*

- `WithNo(ctx)` -> *ctx*, not *~A~(ctx)*

- `WithNo(With(ctx))` -> *~A~(ctx)*

- `WithNo(WithNo(With(ctx)))` -> *~A~(ctx)*, not *~B~(~A~(ctx))*

- `With(WithNo(With(ctx)))` -> *B(~A~(ctx))*

- `WithNo(With(WithNo(With(ctx))))` -> *~B~(~A~(ctx))*

- `With(WithNo(With(WithNo(With(ctx)))))` -> *C(~B~(~A~(ctx)))*
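
The nesting rules can be reproduced with a small sketch. It is simplified on purpose: the 10s expiration and the warning logs are left out, and the lower-case names (`withCacheContext`, `withNoCacheContext`, `hasLiveCache`, `rootContext`) are illustrative stand-ins rather than the real implementation.

```go
package main

import (
	"context"
	"sync"
)

type cacheContextKeyType struct{}

// cacheContext is a simplified stand-in for the real cache context.
type cacheContext struct {
	mu        sync.RWMutex
	data      map[any]any
	discarded bool
}

func (c *cacheContext) isDiscarded() bool {
	c.mu.RLock()
	defer c.mu.RUnlock()
	return c.discarded
}

// discard drops the cached data and marks the cache as unusable.
func (c *cacheContext) discard() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data = nil
	c.discarded = true
}

// rootContext plays the role of `ctx` in the rules above.
func rootContext() context.Context { return context.Background() }

// withCacheContext reuses a live cache context and only wraps a new one
// when there is none or the nearest one has been discarded.
func withCacheContext(ctx context.Context) context.Context {
	if c, ok := ctx.Value(cacheContextKeyType{}).(*cacheContext); ok && !c.isDiscarded() {
		return ctx
	}
	return context.WithValue(ctx, cacheContextKeyType{}, &cacheContext{data: make(map[any]any)})
}

// withNoCacheContext discards the nearest cache context, if any, and
// returns the context unchanged otherwise.
func withNoCacheContext(ctx context.Context) context.Context {
	if c, ok := ctx.Value(cacheContextKeyType{}).(*cacheContext); ok {
		c.discard()
	}
	return ctx
}

// hasLiveCache reports whether the nearest cache context can still be used.
func hasLiveCache(ctx context.Context) bool {
	c, ok := ctx.Value(cacheContextKeyType{}).(*cacheContext)
	return ok && !c.isDiscarded()
}
```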

The merge and update branch code was previously a little tangled and had

some very long functions. The functions were not very clear in their

reasoning and there were deficiencies in their logging and at least one

bug in the handling of LFS for update by rebase.

This PR substantially refactors this code and splits things out into

separate functions. It also attempts to tidy up the calls by wrapping

things in "context"s. There are also attempts to improve logging when

there are errors.

Signed-off-by: Andrew Thornton <art27@cantab.net>

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Co-authored-by: delvh <dev.lh@web.de>

Close #23241

Before: press Ctrl+Enter in the Code Review Form, a single comment will

be added.

After: press Ctrl+Enter in the Code Review Form, start the review with

pending comments.

The old name `is_review` is not clear, so the new code uses

`pending_review` as the new name.

Co-authored-by: delvh <leon@kske.dev>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

When creating commit status for Actions jobs, a payload with nil

`HeadCommit` will cause panic.

Reported at:

https://gitea.com/gitea/act_runner/issues/28#issuecomment-732166

Although the `HeadCommit` probably can not be nil after #23215,

`CreateCommitStatus` should protect itself, to avoid being broken in the

future.

In addition, it's enough to print error log instead of returning err

when `CreateCommitStatus` failed.

---------

Co-authored-by: delvh <dev.lh@web.de>

Extract from #11669 and enhancement to #22585 to support exclusive

scoped labels in label templates

* Move label template functionality to label module

* Fix handling of color codes

* Add Advanced label template

When fetching remotes for conflict checking, skip unnecessary and

potentially slow writing of commit graphs.

In a test with the Blender repository, this reduces conflict checking

time for one pull request from about 2s to 0.1s.

Fix: #22990

---

Before, the return value of the API was always false, regardless of

whether the entry of `sync_on_commit` was true or false.

I have confirmed that the value of `sync_on_commit` dropped into the

database is correct.

So, I think it is enough to make some small changes.

minio/sha256-simd provides additional acceleration for SHA256 using

AVX512, SHA Extensions for x86 and ARM64 for ARM.

It provides a drop-in replacement for crypto/sha256 and if the

extensions are not available it falls back to standard crypto/sha256.

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Close #23027

`git commit` message option _only_ supports 4 formats (well, only ....):

* `"commit", "-m", msg`

* `"commit", "-m{msg}"` (no space)

* `"commit", "--message", msg`

* `"commit", "--message={msg}"`

The long format with `=` is the best choice, and it's documented in `man

git-commit`:

`-m <msg>, --message=<msg> ...`

ps: I would suggest always using the long format option for git commands, as

much as possible.
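
A tiny illustration of the preferred form. `commitArgs` is a hypothetical helper: because the message is glued to the option name with `=`, it stays a single argument and cannot be mistaken for another option, even when it starts with a dash.

```go
package main

// commitArgs builds the argument list for `git commit` using the long
// option form with `=` (hypothetical helper).
func commitArgs(msg string) []string {
	return []string{"commit", "--message=" + msg}
}
```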

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Ensure that issue pullrequests are loaded before trying to set the

self-reference.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <leon@kske.dev>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Some bugs are caused by the lack of unit tests in fundamental packages. This PR

refactors the `setting` package so that creating a unit test will be easier

than before.

- All `LoadFromXXX` functions have been split into two functions:

`InitProviderFromXXX` and `LoadCommonSettings`. The first function will

only include the code to create or new an ini file. The second function

will load common settings.

- It also renames all functions in setting from `newXXXService` to

`loadXXXSetting` or `loadXXXFrom` to make the function name less

confusing.

- Move `XORMLog` to `SQLLog` because it's a better name for that.

Maybe we should finally move these `loadXXXSetting` into the `XXXInit`

function? Any idea?

---------

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

Add a new "exclusive" option per label. This makes it so that when the

label is named `scope/name`, no other label with the same `scope/`

prefix can be set on an issue.

The scope is determined by the last occurrence of `/`, so for example

`scope/alpha/name` and `scope/beta/name` are considered to be in

different scopes and can coexist.
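
The scope rule can be written down in a few lines. This is a sketch with the hypothetical name `labelScope`; treating names that start or end with `/` as unscoped is an assumption, not something stated above.

```go
package main

import "strings"

// labelScope returns the exclusive scope of a label name, i.e. everything
// before the last "/". It returns "" when the name has no usable scope.
func labelScope(name string) string {
	i := strings.LastIndex(name, "/")
	// Assumption: a leading or trailing "/" does not form a scope.
	if i <= 0 || i == len(name)-1 {
		return ""
	}
	return name[:i]
}
```

Two labels conflict only when both are exclusive and `labelScope` returns the same non-empty value for them.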

Exclusive scopes are not enforced by any database rules, however they

are enforced when editing labels at the models level, automatically

removing any existing labels in the same scope when either attaching a

new label or replacing all labels.

In menus use a circle instead of checkbox to indicate they function as

radio buttons per scope. Issue filtering by label ensures that only a

single scoped label is selected at a time. Clicking with alt key can be

used to remove a scoped label, both when editing individual issues and

batch editing.

Label rendering refactor for consistency and code simplification:

* Labels now consistently have the same shape, emojis and tooltips

everywhere. This includes the label list and label assignment menus.

* In label list, show description below label same as label menus.

* Don't use exactly black/white text colors to look a bit nicer.

* Simplify text color computation. There is no point computing luminance

in linear color space, as this is a perceptual problem and sRGB is

closer to perceptually linear.

* Increase height of label assignment menus to show more labels. Showing

only 3-4 labels at a time leads to a lot of scrolling.

* Render all labels with a new RenderLabel template helper function.

Label creation and editing in multiline modal menu:

* Change label creation to open a modal menu like label editing.

* Change menu layout to place name, description and colors on separate

lines.

* Don't color cancel button red in label editing modal menu.

* Align text to the left in modal menu for better readability and

consistency with settings layout elsewhere.

Custom exclusive scoped label rendering:

* Display scoped label prefix and suffix with slightly darker and

lighter background color respectively, and a slanted edge between them

similar to the `/` symbol.

* In menus exclusive labels are grouped with a divider line.

---------

Co-authored-by: Yarden Shoham <hrsi88@gmail.com>

Co-authored-by: Lauris BH <lauris@nix.lv>

To avoid loading the same data more than once in an HTTP request, we can set

a context cache. For example, some pages may load a user with the same id from the

database in different areas of the same page, but the

code is hidden in two different deep logic paths. How should we share the

user? As a result of this PR, if both entry functions accept

`context.Context` as the first parameter, we just need to refactor

`GetUserByID` to reuse the user from the context cache. Then it will not

be loaded twice in an HTTP request.

But of course, sometimes we would like to reload an object from the

database, that's why `RemoveContextData` is also exposed.

The core context cache is here. It defines a new context

```go

type cacheContext struct {

ctx context.Context

data map[any]map[any]any

lock sync.RWMutex

}

var cacheContextKey = struct{}{}

func WithCacheContext(ctx context.Context) context.Context {

return context.WithValue(ctx, cacheContextKey, &cacheContext{

ctx: ctx,

data: make(map[any]map[any]any),

})

}

```

Then you can use the 4 functions below to read/write/delete the data within

the same context.

```go

func GetContextData(ctx context.Context, tp, key any) any

func SetContextData(ctx context.Context, tp, key, value any)

func RemoveContextData(ctx context.Context, tp, key any)

func GetWithContextCache[T any](ctx context.Context, cacheGroupKey string, cacheTargetID any, f func() (T, error)) (T, error)

```

Then let's take a look at how `system.GetSetting` implements it.

```go

func GetSetting(ctx context.Context, key string) (string, error) {

return cache.GetWithContextCache(ctx, contextCacheKey, key, func() (string, error) {

return cache.GetString(genSettingCacheKey(key), func() (string, error) {

res, err := GetSettingNoCache(ctx, key)

if err != nil {

return "", err

}

return res.SettingValue, nil

})

})

}

```

First, it checks whether the context data includes the setting object with the

key. If not, it queries the global cache, which may be memory or

a Redis cache. If that misses too, it gets the object from the database. In the

end, if the object came from the global cache or the database, it is

set into the context cache.

An object stored in the context cache will only be destroyed after the

context is gone.
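
To make the lookup order concrete, here is a simplified, self-contained sketch of a per-request memoizing helper. It is not the real implementation: `requestCache`, `newRequestContext` and the lower-case `getWithContextCache` are illustrative names, the global cache layer is folded into the `load` callback, and `id` is assumed to be a comparable value.

```go
package main

import (
	"context"
	"sync"
)

type requestCacheKey struct{}

// requestCache stores values per group and id for one request.
type requestCache struct {
	mu   sync.RWMutex
	data map[string]map[any]any
}

// newRequestContext simulates the context created for one HTTP request.
func newRequestContext() context.Context {
	return context.WithValue(context.Background(), requestCacheKey{},
		&requestCache{data: make(map[string]map[any]any)})
}

// getWithContextCache returns the cached value for (group, id) if present,
// otherwise calls load and stores a successful result in the context cache.
func getWithContextCache[T any](ctx context.Context, group string, id any, load func() (T, error)) (T, error) {
	c, ok := ctx.Value(requestCacheKey{}).(*requestCache)
	if !ok {
		return load() // no cache attached to this context
	}
	c.mu.RLock()
	v, hit := c.data[group][id]
	c.mu.RUnlock()
	if hit {
		if typed, ok := v.(T); ok {
			return typed, nil
		}
	}
	value, err := load()
	if err != nil {
		return value, err
	}
	c.mu.Lock()
	if c.data[group] == nil {
		c.data[group] = make(map[any]any)
	}
	c.data[group][id] = value
	c.mu.Unlock()
	return value, nil
}
```

Calling it twice with the same context, group and id runs `load` only once; a fresh request context starts with an empty cache again.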